Computing Power Sovereignty: A Global AI Computing Hardware Landscape Report 2025 – Geopolitical Games, Technological Transformation, and Sustainability Challenges

Report Date: December 2025 AISOTA.com

Quick Navigation / Table of Contents

- Chapter 1: Core Variables and Paradigm Shift: Annual Industry Overview

- Chapter 2: Global AI Hardware Industry Geographic Distribution and Competitive Landscape

- Chapter 3: The Diversified Evolution of AI Compute Forms and Future Paradigms

- Chapter 4: Mainstream Product Matrix, Market Dynamics, and Supply Chain Analysis (Latest as of December 2025)

- Chapter 5: Application-Oriented Hardware Configuration Guide (2025 Edition)

- Chapter 6: Compute Economics: Costs, Price Trends, and Business Model Innovations

- Chapter 7: Energy Constraints: AI's "Power Wall" and Sustainable Development

- Chapter 8: Frontier Technology Trends and Future Outlook

- Appendix

Chapter 1: Core Variables and Paradigm Shift: Annual Industry Overview

In 2025, AI development has fully transitioned from algorithm-driven "soft power" competition to a "hard power" game anchored by computing hardware. Major global economies clearly recognize that whoever masters the design and supply of advanced AI computing hardware controls the source of productivity in the intelligent era. This year, the strategic status of AI chips and servers is comparable to that of oil in the industrial age and semiconductors in the information age, and they have become a core indicator of national strength in great-power competition.

At the industry level, the demand for large model training and inference is growing exponentially, driving hardware architecture to iterate at an unprecedented pace. Simultaneously, the intensity of geopolitics, the urgency of energy consumption, and the divergence of technological paths are deeply intertwined in 2025, collectively shaping a new industrial landscape full of tension and uncertainty. This chapter will use the year's most pivotal event as a starting point to analyze this profound transformation reshaping the global balance of technological power.

1.2 In-depth Analysis of the 2025 Landmark Event: The U.S. "Conditional Approval" Strategy for Chip Controls on China

On December 8, 2025, the U.S. Department of Commerce's Bureau of Industry and Security (BIS) officially approved NVIDIA to sell its H200 Tensor Core GPU to specific, vetted Chinese customers. This decision was not an unconditional market opening; it came with an unprecedentedly stringent clause: NVIDIA must remit 25% of the relevant sales revenue to the U.S. government. This policy also applies to other U.S. chip companies, such as AMD and Intel, that sell similar high-end AI chips to China.

Core Details:

- Approval Scope: Limited to the H200 chip, based on the previous-generation Hopper architecture. NVIDIA's newer Blackwell architecture chips (e.g., B200) and planned Rubin architecture products remain strictly prohibited for sale to China.

- Customer Vetting: Sales are not to all Chinese companies but only to "approved customers," expected to be primarily a few leading cloud service providers and AI R&D institutions, with ongoing monitoring of their procurement purposes and end-users.

- "Compute Tax": The 25% revenue remittance rate is essentially the U.S. government directly extracting a high "technology licensing fee" or "geopolitical risk premium" from commercial transactions.
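The arithmetic behind the "compute tax" can be sketched in a few lines. The 25% rate is the figure stated above; the revenue amount is purely hypothetical and chosen only to make the split easy to read.

```python
# Illustrative only: the 25% rate comes from the report; the revenue figure is hypothetical.
REMITTANCE_RATE = 0.25


def split_revenue(sales_revenue: float, rate: float = REMITTANCE_RATE) -> tuple[float, float]:
    """Return (amount remitted to the U.S. government, amount retained by the vendor)."""
    remitted = sales_revenue * rate
    return remitted, sales_revenue - remitted


# Hypothetical example: $1B of H200 sales to approved customers.
remitted, retained = split_revenue(1_000_000_000)
print(f"remitted: ${remitted:,.0f}, retained: ${retained:,.0f}")
```

Because the remittance is levied on revenue rather than profit, the vendor's margin on these sales shrinks by more than the headline rate suggests.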

Political and Economic Logic:

This policy is a typical "cost internalization" and "interest bundling" strategy.

- Alleviate Industry Pressure: Directly responds to strong demands from the U.S. chip industry, led by NVIDIA, to avoid completely losing share in one of the world's largest semiconductor markets, maintaining sustainable corporate revenue and R&D investment.

- Establish a High Tech Wall: By allowing sales of "previous-generation" products (H200) rather than the most cutting-edge ones (B200), it partially meets China's high-end computing needs while ensuring at least a one-to-two-generation technological gap between the U.S. and China, extending U.S. technological leadership.

- Generate Direct Revenue: Transforms geopolitical competition into direct fiscal income, setting a precedent of "pay-to-pass" in technology control, providing a new tool template for future U.S. technology trade policies.

The shift from "comprehensive blockade" to "technology gap lock-in + profit sharing" marks a fundamental change in U.S. strategy for controlling advanced technology exports to China. The influence of industry lobbying, led by NVIDIA's CEO Jensen Huang, was decisive. The new normal emerging in China is a hybrid computing pattern: "Training with imported chips, inference with domestic chips."

Chapter Conclusion: 2025, marked by the "conditional approval" of H200, heralds a new era for the global AI hardware industry, characterized by geopolitical instrumentalization, politicized commercial logic, regionalized technology paths, and diversified competition dimensions.

Chapter 2: Global AI Hardware Industry Geographic Distribution and Competitive Landscape

2.1 North America: Ecosystem Hegemony and Innovation Hub

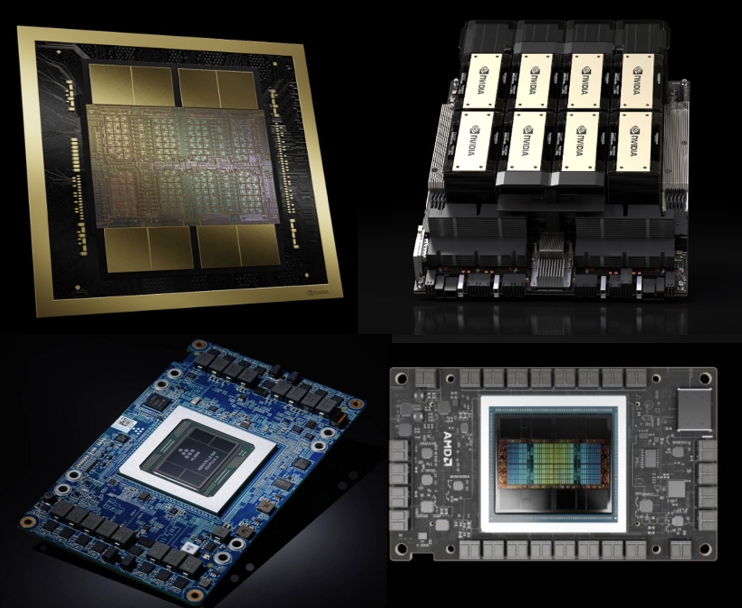

As the absolute core of the global AI hardware industry, North America (especially the Silicon Valley-centric region) remained the hub of ecosystem hegemony and disruptive innovation in 2025. NVIDIA's vertical integration from IP design to cloud services, coupled with the deepening self-developed chip strategies of tech giants like Google, Amazon Web Services, and Microsoft, defines the landscape. Meanwhile, challengers like AMD and Intel are making market offensives.

| Company/Initiative | Key Product/Progress (2025) | Strategy/Impact |
|---|---|---|
| NVIDIA | H200 (approved for China), GB200/GB300 (Blackwell), Planned delivery of 20M Blackwell/Rubin chips over next 5 quarters | Maintains >60% market share via CUDA ecosystem; faces challenges from cloud giants' in-house chips and OpenAI's hardware ambitions. |
| Google | TPU v5e/v5p, TPU v6 (preview), Negotiating multi-billion dollar TPU sales with Meta | Transitioning TPU from internal "weapon" to competitive "commodity"; projected to dent NVIDIA's market share. |
| Amazon AWS | Trainium3 (3nm), Inferentia2, Over 1M self-developed AI chips deployed | Builds cost advantage (up to 50% lower training cost claimed) via system-level optimization and scale. |
| Microsoft | Maia 200 (delayed to 2026), Exploring custom AI accelerators with Broadcom | Balanced approach between in-house development and multi-source procurement for Azure. |
| AMD | Instinct MI350 series, Targeting performance/supply gaps | Improving ROCm software stack to compete with NVIDIA's CUDA. |
| Intel | Gaudi 3 (focus on inference), "Crescent Island" Data Center GPU (samples 2026H2) | Differentiates on energy efficiency and inference market, promoting liquid-cooled rack designs. |

2.2 East Asia: Manufacturing Base, Terminal Innovation, and Autonomous Breakthrough

East Asia is the undisputed "twin engine" of global AI hardware. It is the world's most advanced semiconductor manufacturing and memory supply "heart." Simultaneously, the massive market centered on China is driving a "full-stack autonomous" breakthrough amidst complex geopolitics.

2.2.1 China: Multi-Core Driven Full-Stack Breakthrough

- Huawei Ascend Ecosystem: Evolved into China's de facto standard "domestic compute base." The Ascend 910B chip and Atlas 900 SuperCluster provide cluster computing power. The ecosystem is expanding rapidly via the MindSpore framework and "all-in-one" machine solutions.

- Pure-Play AI Chip Companies: Companies like Cambricon, Biren Technology, and Moore Threads are pursuing differentiated paths via technology licensing, application-specific chips, and focusing on graphics/multimedia compute.

- Terminal-side NPU Innovation: Chinese smartphone OEMs are in an SoC arms race, with flagship NPU performance exceeding 50 TOPS and moving towards 100 TOPS. Companies like OPPO, Vivo, Xiaomi, and Huawei are deeply involved in NPU IP design and algorithm-hardware co-optimization.

2.2.2 South Korea & Taiwan: The "Manufacturing Heart" of Global AI Hardware

| Company/Region | Key Role/Product | 2025 Status & Bottleneck |
|---|---|---|
| TSMC (Taiwan) | CoWoS Advanced Packaging | The ultimate bottleneck for global AI chip supply. Despite doubling capacity in 2025, it remains "fully booked." |
| Samsung (Korea) | HBM3e/HBM4 Memory, Advanced Packaging (I-Cube) | Competing fiercely with SK Hynix on HBM yield and capacity. Developing own packaging to challenge TSMC. |
| SK Hynix (Korea) | HBM3e/HBM4 Memory | Currently leading in HBM3e yield and market share. Deep co-design with chipmakers like NVIDIA. |

2.3 Europe: Niche Focus and Green Computing Pioneer

Europe's strategy focuses on areas of inherent advantage or strategic importance, avoiding direct competition in consumer AI chips.

- Technology Sovereignty: The European Processor Initiative (EPI) and SiPearl's Rhea processor aim to power European exascale supercomputers (e.g., JUPITER, LEONARDO).

- Inherent Advantages in Equipment & Automotive: ASML holds the "ultimate bottleneck" position with EUV lithography machines. Companies like Infineon, NXP, and STMicroelectronics dominate automotive chips with functional safety and system integration expertise.

- "Green AI" Leadership: The EU is setting stringent ICT energy efficiency regulations. European companies like Aspera (Sweden) and Viessmann (Germany) are leaders in liquid cooling technology, which is becoming mandatory for high-density AI clusters.

Chapter 3: The Diversified Evolution of AI Compute Forms and Future Paradigms

3.1 Cloud Centralized Compute: From General Cards to Superclusters

The paradigm is shifting from purchasing discrete accelerators to deploying integrated, software-defined system-level products—"compute clusters."

3.1.1 "Compute Cluster as a Product": NVIDIA DGX SuperPOD vs. Huawei Atlas SuperCluster

The integration unit has evolved from servers/racks to "Super Nodes." Huawei's Atlas 900 A3 SuperPoD integrates 384 Ascend NPUs as a single logical entity, significantly improving inter-chip bandwidth and reducing latency. Both NVIDIA and Huawei offer scalable, modular cluster "building blocks." Huawei has published a roadmap for future SuperPoDs targeting up to 30 EFLOPS FP8 compute. Competition is extending to defining open standards for cluster interconnects.

3.1.2 Challenges of Ultra-Large-Scale AI Compute Centers

Building and operating 10,000+ card clusters faces major challenges:

- Technical: Extreme power efficiency and cooling (liquid cooling is now mandatory), scheduling heterogeneous compute resources, and full-stack hardware-software co-optimization.

- Operational: Risk of "digital unfinished buildings" due to severe supply-demand mismatch. Reports indicate average utilization of AI compute centers in China is only around 30%, with some facilities built without local demand.

Solutions: Building a national "compute network" for unified scheduling, activating demand via "compute vouchers," and implementing stricter project approval mechanisms.
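To see why utilization matters so much, a rough sketch: if a facility's all-in cost per card-hour is fixed, the cost of each hour that actually does useful work scales inversely with utilization. The $2.00 base cost is a hypothetical placeholder; the 30% utilization is the figure reported above.

```python
def effective_cost_per_used_hour(cost_per_card_hour: float, utilization: float) -> float:
    """Cost of one productive card-hour when only a fraction of capacity is used."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return cost_per_card_hour / utilization


BASE_COST = 2.00  # hypothetical all-in cost per card-hour, in USD

for utilization in (0.30, 0.60, 0.90):
    cost = effective_cost_per_used_hour(BASE_COST, utilization)
    print(f"utilization {utilization:.0%}: ${cost:.2f} per productive card-hour")
```

Under this simplification, raising utilization from 30% to 60% halves the effective cost without buying any new hardware, which is the economic case for unified scheduling.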

3.2 Edge & Terminal Compute: From Auxiliary to Mainstream

Driven by model miniaturization, privacy needs, and hardware specialization, edge compute is becoming a primary platform for real-time, intelligent applications.

| Core Carrier | 2025 Status & Key Features | Industry Impact |
|---|---|---|
| AI PC | >20% of notebook sales; NPU >90 TOPS; Evolving into "Personal Super Agent" with proactive services. | Standards battle (Copilot+ PC vs. Arm/Apple). Ecosystem still nascent. Domestic variants emerging in China. |
| AI Smartphone | NPU >100 TOPS; Running 3B-8B parameter multimodal models locally; Enabling "one-command complex operations." | Competition shifted to on-device multimodality and agent ecosystem building. Lack of killer apps remains a challenge. |
| AI/AR Glasses | Evolving from media accessory to next-gen computing platform (e.g., XREAL's glasses with spatial compute chip). | Chinese companies lead consumer AR market. Competition moving to underlying chips and optics. |
| Vehicle Central Computer | "Cockpit-Drive Integration" with chips like NVIDIA DRIVE Thor (2000 TOPS); Central Compute + Zone Control architecture. | Transforming automotive supply chain; enabling true intelligent cockpit with generative AI. |

3.3 Compute Networks and Distributed Sharing: The Next-Generation Infrastructure Blueprint

To address uneven geographic distribution and explosive demand, the focus is shifting to efficiently connecting and sharing distributed compute resources.

- National Integrated Compute Network (China): The "East Data West Computing" project aims to schedule compute-intensive, latency-insensitive tasks to western regions with renewable energy. A national scheduling platform is being built, and "compute vouchers" are used to stimulate demand.

- Blockchain-Driven Shared Platforms: Platforms like Akash Network and Render Network are moving towards compliance and enterprise-grade applications for AI inference and rendering tasks, though challenges remain in performance stability and toolchain compatibility.

- Future Outlook - DePIN: The concept of Decentralized Physical Infrastructure Networks (DePIN) could mobilize distributed individual hardware into a global compute pool via token incentives, though economic sustainability is a key challenge.
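The routing idea behind "East Data West Computing" can be illustrated with a toy placement rule. This is a minimal sketch, not the national platform's actual logic: the `Job` record, the region labels, and the single latency flag are all hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Job:
    """Hypothetical job description used only for this illustration."""
    name: str
    latency_sensitive: bool
    gpu_hours: float


def route(job: Job) -> str:
    """Keep latency-sensitive work near users; send everything else west."""
    return "east" if job.latency_sensitive else "west"


jobs = [
    Job("chat-inference", latency_sensitive=True, gpu_hours=50),
    Job("pretraining", latency_sensitive=False, gpu_hours=10_000),
    Job("batch-embedding", latency_sensitive=False, gpu_hours=300),
]

placement = {job.name: route(job) for job in jobs}
print(placement)
```

A production scheduler would presumably also weigh data locality, network cost, and available capacity; the point here is only that latency tolerance is the first sorting criterion.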

Chapter 4: Mainstream Product Matrix, Market Dynamics, and Supply Chain Analysis (Latest as of December 2025)

4.1 Cloud Training Chips: Performance, Price, and Availability Matrix

The market for cloud training chips directly reflects the intensity of global technological competition and industrial strategy. U.S. policy is the dominant factor.

| Product Model | Core Architecture | Key Perf. (FP16/BF16) | Supply Status to China | Market Price (Est.) | Availability Analysis |
|---|---|---|---|---|---|
| NVIDIA H200 | Hopper | ~1979 TFLOPS, 141GB HBM3e | Conditional Approval | ~$250,000 - $350,000 /card* | Extremely limited, only for approved customers. Lead time >6 months. |
| NVIDIA H20 | Hopper (China-specific) | ~148 TFLOPS, 96GB HBM3 | Normal Supply | ~$25,000 - $35,000 /card | Relatively available. Performance is only ~7% of H200. |
| AMD MI300X | CDNA 3 | ~2048 TFLOPS, 192GB HBM3 | Strictly Banned | ~$40,000 - $50,000 /card (Int'l) | No legal channel to China. High legal/supply risk. |
| AMD MI325X | CDNA 3+ | +30% vs MI300X, HBM3e | Strictly Banned | TBD | Completely isolated from China market. |

*Price includes the 25% "license fee" to U.S. government, making it a non-market-driven "political price."
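As a quick check on the "~7%" figure, the sketch below recomputes the H20-to-H200 throughput ratio and a rough price per TFLOPS. All inputs are this report's own estimates from the table above; using the midpoint of each price range is an assumption made here for simplicity.

```python
# Figures copied from the table above; prices are midpoints of the estimated ranges.
chips = {
    "H200": {"tflops": 1979, "price_usd": (250_000 + 350_000) / 2},
    "H20": {"tflops": 148, "price_usd": (25_000 + 35_000) / 2},
}

# Peak-throughput ratio of the China-specific H20 relative to the H200.
ratio = chips["H20"]["tflops"] / chips["H200"]["tflops"]

# Estimated dollars paid per peak TFLOPS for each card.
usd_per_tflops = {name: c["price_usd"] / c["tflops"] for name, c in chips.items()}

print(f"H20 / H200 peak throughput: {ratio:.1%}")
for name, value in usd_per_tflops.items():
    print(f"{name}: ${value:,.0f} per peak TFLOPS")
```

On these estimates the ratio comes out close to 7.5%, and the H20 is the more expensive card per unit of peak throughput, although peak TFLOPS ignores memory capacity and bandwidth.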

4.1.2 Domestic Mainstream Chips: Performance and Supply

| Product Model | Core Architecture | Key Performance | Software Ecosystem | Supply & Price |
|---|---|---|---|---|
| Huawei Ascend 910B | Da Vinci | ~320 TFLOPS (FP16) | MindSpore native, PyTorch adaptation improving | Tight supply, sold as servers. An 8-card server ~$500,000 - $700,000. Prioritized for national projects. |
| Hygon DCU (Deep Compute) | CDNA-like | Competitive with last-gen int'l products | Compatible with ROCm, good PyTorch support | Relatively autonomous supply via licensed IP. Competitive pricing in gov't cloud & research sectors. |
| Cambricon MLU590 | MLUarch | Claims mainstream training performance | Cambricon software platform | Focuses on top internet/finance clients. Lower visibility than Ascend/Hygon. |

Market Insight: The "hybrid compute" pattern has solidified: Ascend is the primary choice for training within China, while inference draws on a more diverse mix of domestic chips and export-compliant international cards.

4.2 Cloud Inference & Edge Computing Hardware

| Product Model | Positioning | Typical Use Case | Price Reference (China) |
|---|---|---|---|
| NVIDIA L40S | General Inference/Rendering | Real-time LLM inference, AI image/video gen | ~$8,000 - $11,000 /card |
| NVIDIA L20 | China-specific Inference | Online inference, content moderation in China | ~$4,000 - $7,000 /card |
| Huawei Ascend 310P | High-Density Inference | Smart city camera analytics, OCR, cloud phone | Significantly lower cost per card than int'l equivalents |

Edge AI boxes and modules are highly customized. The trend is towards "out-of-the-box" solutions with pre-installed algorithms and multi-sensor fusion, with cost being a decisive factor.

4.3 Terminal AI Chips: Smartphone, PC & IoT

4.3.1 Smartphone SoC NPU Performance Ranking & On-Device Model Support

| Platform/Vendor | Representative SoC | NPU Peak (TOPS) | On-Device Model Support (2025) |
|---|---|---|---|
| Qualcomm | Snapdragon 8 Gen 4 | > 100 | 7B parameter models, multimodal |
| MediaTek | Dimensity 9400 | > 90 | 5-7B models, optimized with Alibaba's Qwen |
| Apple | A18 Pro / M4 | Neural Engine (16/18-core) | Apple Intelligence, 3B specialized models |
| Huawei HiSilicon | Kirin 9010 | Da Vinci NPU | Lightweight Pangu model, integrated with HarmonyOS |

Key Progress: Top on-device models are now compressed to 3B-8B parameters, enabling fast text generation, image understanding, and simple video summarization on phone NPUs. "Agent" features (AI operating apps) are a key demo focus.

4.3.2 AI PC NPU Standard War and Ecosystem

In 2025, AI PCs moved from concept to volume, but the ecosystem is still nascent.

- Standards Battle: The "Copilot+ PC" standard promoted by Microsoft and Intel requires an NPU rated at 40+ TOPS. However, Qualcomm (Arm) and Apple (M-series) are challenging x86 dominance with their high-performance NPUs.

- Ecosystem Bottleneck: Killer AI applications for PCs are scarce. Mainstream app AI features (Office, Adobe) still rely on the cloud, leading to low NPU utilization.

- China Market: Domestic PC makers are promoting AI PCs based on domestic CPUs (e.g., Phytium) + NPUs, adapting them with local LLMs for government/finance use.

4.4 Critical Supply Chain Analysis

| Supply Chain Node | Competitive Landscape | 2025 Status & Bottleneck | Geopolitical Impact |
|---|---|---|---|
| Advanced Process (3nm/2nm) | TSMC dominance. Samsung, Intel competing. | Capacity fully booked by Apple, NVIDIA, etc. Bookings extend to 2026-27. | Chinese designers (e.g., HiSilicon) cannot access leading nodes, forcing architectural innovation (Chiplet) on mature nodes. |
| HBM3e / HBM4 Memory | SK Hynix lead, Samsung chasing, Micron following. | Severe undersupply. HBM cost can be >50% of total AI chip cost. Shortage expected into 2026. | N/A |
| Advanced Packaging (CoWoS etc.) | TSMC CoWoS is bottleneck. Intel Foveros, Samsung I-Cube competing. | Biggest bottleneck limiting final AI chip output. TSMC expanding aggressively but still insufficient. | Strategic alliances for capacity access are crucial. |

Chapter 5: Application-Oriented Hardware Configuration Guide (2025 Edition)

This chapter provides practical hardware configuration strategies for AI participants of different scales and needs, based on the latest market conditions.

5.1 National-Level / Hyper-Scale Enterprises: 10,000+ Card AI Compute Center Planning

Core Principle: Plan for a "sustainable compute factory," not just hardware procurement. Focus on effective utilization, performance-per-watt, and total cost of ownership (TCO).

Architecture Strategy: A "primary-secondary" hybrid architecture is pragmatic.

- Core Training Cluster (domestic focus): Invest >70% CAPEX in proven domestic clusters (e.g., Huawei Atlas SuperCluster) for core model training/finetuning, ensuring autonomy.

- Frontier Exploration Cluster (supplemented by international): Procure/lease a smaller-scale international flagship cluster (e.g., hundreds of NVIDIA cards) for algorithm validation and international open-source model research. Acts as a "technology radar."

- Unified Scheduler: Use advanced cluster management software to pool and schedule resources across both architectures.

Key Operational Considerations: Liquid cooling (target PUE <1.2) is mandatory. Talent for operating large clusters is critical and costly.
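Power Usage Effectiveness (PUE) is the ratio of total facility power to IT equipment power, so the target above translates directly into a cooling and overhead budget. The sketch below is illustrative: the 10,000-card cluster at roughly 700 W per accelerator is an assumed load, not a figure for any specific site.

```python
def facility_power_kw(it_power_kw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_power_kw * pue


def overhead_kw(it_power_kw: float, pue: float) -> float:
    """Power spent on cooling, power conversion and other non-IT loads."""
    return facility_power_kw(it_power_kw, pue) - it_power_kw


# Assumption: 10,000 accelerators at about 700 W each, ignoring CPUs and networking.
it_load_kw = 10_000 * 0.7

for pue in (1.5, 1.2, 1.05):
    print(f"PUE {pue}: total {facility_power_kw(it_load_kw, pue):,.0f} kW, "
          f"overhead {overhead_kw(it_load_kw, pue):,.0f} kW")
```

Each 0.1 reduction in PUE on a load of this size frees several hundred kilowatts, which is why cooling design is treated as a first-order planning decision.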

5.2 Mid-Sized Enterprises / AI Unicorns: 100+ Card Private Cluster

These firms have clear AI product roadmaps and stable compute needs but are capital-sensitive.

| Consideration | International Option (e.g., NVIDIA DGX Pod) | Domestic Option (e.g., Huawei Atlas 800) | Analysis & Recommendation |
|---|---|---|---|
| Performance | High, predictable | Catching up, close in specific optimizations | If business relies heavily on int'l ecosystem (Stable Diffusion, LLaMA), int'l option offers higher dev efficiency. |
| Cost & Supply Security | Very high cost, supply chain volatility | Lower TCO, faster service response, secure supply | Domestic option has clear cost and security advantages. |
| Software Ecosystem | Best (CUDA) | Improving rapidly (MindSpore, PyTorch compatibility) | Assess team's tech stack. If deep in PyTorch and willing to adapt, domestic is viable. |
| Long-term Risk | High geopolitical risk, future upgrades uncertain | Clear policy support, roadmap | Compute availability over next 24 months is a key decision factor. |

Financing Models: Equipment leasing and "Compute-as-a-Service" long-term contracts are mainstream to reduce upfront CAPEX.

Recommended Strategy: "Domestic base + Cloud-based international compute for elastic supplementation." Build a domestic card-based cluster for daily work (70%+ load), and use cloud services for peak training needs.

5.3 Small Teams & Startups: Cost-Benefit Analysis

Core principle: Maximize flexibility, avoid asset sink.

| Option | Typical Setup | Upfront/Deposit | Monthly Cost |
|---|---|---|---|
| Build Small Server | 8x NVIDIA RTX 4090 D or domestic cards | $30,000 - $70,000 | Electricity, maintenance labor |
| Cloud Compute On-Demand | Hourly rental of H800/A100 instances | ~$0 | Can reach tens of thousands at high load |
| Cloud Long-Term Contract | Yearly/monthly reserved instances | 1-3 months deposit | 30%-50% lower than on-demand |
| Hybrid Cloud | Local small server + multi-cloud elastic | $15,000 - $30,000 (local) | On-demand cost for elastic part |

Decision Framework: Calculate the break-even point between self-build (3-year TCO) and 3-year cloud rental. For most startups, a "long-term contract + on-demand burst" cloud model is safer. Also consider domestic cloud compute (e.g., Alibaba Cloud, Huawei Cloud Ascend instances), which is 20%-40% cheaper than instances based on international cards.
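The break-even calculation can be sketched as follows. Every number here is a hypothetical input: the $50,000 hardware cost sits inside the self-build range quoted in the table, while the monthly operating cost and the cloud hourly rate are placeholders to be replaced with real quotes. The model also assumes operating cost does not vary with usage.

```python
def self_build_tco(hardware_usd: float, monthly_opex_usd: float, months: int = 36) -> float:
    """Total cost of owning a local server over the period."""
    return hardware_usd + monthly_opex_usd * months


def cloud_cost(hourly_rate_usd: float, gpus: int, hours_per_month: float, months: int = 36) -> float:
    """Total cloud spend for renting the same number of GPUs."""
    return hourly_rate_usd * gpus * hours_per_month * months


def break_even_hours_per_month(hardware_usd: float, monthly_opex_usd: float,
                               hourly_rate_usd: float, gpus: int, months: int = 36) -> float:
    """Monthly usage per GPU above which self-build is cheaper than cloud."""
    tco = self_build_tco(hardware_usd, monthly_opex_usd, months)
    return tco / (hourly_rate_usd * gpus * months)


# Hypothetical inputs: 8-GPU server, $1,500/month power and upkeep, $2.00 per GPU-hour in the cloud.
hours = break_even_hours_per_month(50_000, 1_500, 2.00, gpus=8)
print(f"break-even: {hours:.0f} hours per GPU per month "
      f"({hours / 730:.0%} of the month)")
```

If expected usage sits well below the break-even line, the cloud model is cheaper; if the cluster would run most of the month, ownership starts to pay off.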

5.4 Research Institutes & University Labs

Need to balance cutting-edge research exploration with stable teaching lab needs.

Strategy: Build a "pyramid" compute resource pool.

- Top (~10%): Small high-end research cluster (16-32 high-end cards) for professors/Ph.D. projects.

- Middle (~30%): Medium general-purpose cluster for Master's/high-level course projects.

- Base (~60%): Large-scale desktop workstation lab or VM cluster for undergraduate courses.

All resources should be managed via a unified portal and scheduler (e.g., Slurm) with internal "credits" for fair allocation.

5.5 Individual Developers & Pro Creators

Market Benchmark:

- Performance King: NVIDIA GeForce RTX 4090 D (24GB). ~$1,700 - $2,100. Can run quantized 70B LLMs locally.

- Best Value: NVIDIA GeForce RTX 4080 SUPER (16GB). ~60% the price of 4090 D, handles most AI painting and medium model finetuning.

- Pro Entry: NVIDIA RTX 2000 Ada (12GB, ECC memory). Good for stable long runs.

Recommended High-Value Config (Dec 2025): CPU: AMD Ryzen 7 7800X3D / Intel i7-14700K; GPU: RTX 4080 SUPER; RAM: 64GB DDR5; Storage: 2TB NVMe PCIe 4.0 SSD; PSU: 850W Gold+. Total ~$2,000 - $2,800.

Important Note: VRAM capacity is king for local AI. For development within a domestic ecosystem, machines with domestic GPUs like Moore Threads MTT S80 are an option, but their pure-AI development ecosystem is still maturing.
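A back-of-the-envelope estimate shows why VRAM dominates local model choices. The sketch counts weight memory only, as parameters times bits per weight; KV cache, activations, and framework overhead come on top and are not modeled here.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits_in_vram(params_billion: float, bits_per_weight: float, vram_gb: float) -> bool:
    """True if the weights alone fit; real headroom requirements are larger."""
    return weight_memory_gb(params_billion, bits_per_weight) <= vram_gb


for params, bits in ((8, 16), (8, 4), (70, 4), (70, 2)):
    size = weight_memory_gb(params, bits)
    verdict = "fits" if fits_in_vram(params, bits, vram_gb=24) else "does not fit"
    print(f"{params}B at {bits}-bit: {size:.1f} GB of weights, {verdict} in 24 GB")
```

By this estimate a 70B model at 4-bit needs about 35 GB for weights alone, so running it on a single 24 GB card in practice means more aggressive quantization or offloading part of the model to system memory.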

Chapter 6: Compute Economics: Costs, Price Trends, and Business Model Innovations

6.1 Historical Price Evolution

- Enlightenment Era (2012-2016): Compute price = gaming GPU market price. A cheap "byproduct."

- Industrialization Era (2017-2020): Dedicated AI cards (Tesla V100) emerge. Cloud GPU rental starts. Pricing: hardware depreciation + electricity + profit.

- "Brute Force Compute" Era (2021-2024): Severe shortage. Prices detach from cost, driven by scarcity and "strategic premium." Black market prices 2-3x MSRP.

- Game & Restructure Era (2025-): "Political pricing." Price for high-end chips to China = manufacturing cost + NVIDIA ecosystem premium + U.S. govt license fee + supply chain risk premium. Markets split.

6.2 2025 Cost Breakdown

The Total Cost of Ownership (TCO) iceberg: Hardware CAPEX (~40-50%) is the tip; Operational OPEX (~50-60%) is the larger, submerged part.

| Cost Category | Components | Notes & Trend |
|---|---|---|
| Hardware (CAPEX) | Core AI chip, servers, interconnect (InfiniBand, NVLink switches, optics) | Interconnect can be 30%+ of hardware cost in superclusters. |
| Electricity (OPEX) | Power for IT equipment | Largest OPEX item. Can be >40% of 3-year TCO in high-electricity-cost regions. |
| Cooling (OPEX) | Liquid cooling systems, coolant, maintenance | Mandatory for high-density AI. Increases CAPEX but lowers PUE and long-term electricity cost. |
| Operations & Labor (OPEX) | Data center space, network, repairs, expert engineers | Experts for optimizing 10,000-card clusters can command $150,000+ salaries. |

Key Conclusion: Decision-makers must shift from "procurement cost thinking" to "full lifecycle TCO thinking."
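A lifecycle view can be made concrete with a small model of electricity's share of 3-year TCO. All inputs below are hypothetical (a 10 kW server, PUE of 1.3, and the cost figures) and serve only to show how the share moves with the electricity price.

```python
HOURS_PER_YEAR = 8760


def electricity_cost(it_power_kw: float, pue: float, usd_per_kwh: float,
                     years: float = 3, load_factor: float = 1.0) -> float:
    """Electricity bill over the period, including facility overhead via PUE."""
    return it_power_kw * load_factor * pue * HOURS_PER_YEAR * years * usd_per_kwh


def electricity_share(capex_usd: float, other_opex_usd: float, electricity_usd: float) -> float:
    """Fraction of total cost of ownership spent on electricity."""
    return electricity_usd / (capex_usd + other_opex_usd + electricity_usd)


# Hypothetical 8-GPU server: 10 kW IT load, $300k hardware, $50k other OPEX over 3 years.
for price in (0.05, 0.15, 0.30):
    bill = electricity_cost(it_power_kw=10, pue=1.3, usd_per_kwh=price)
    share = electricity_share(300_000, 50_000, bill)
    print(f"${price:.2f}/kWh: electricity ${bill:,.0f}, {share:.0%} of 3-year TCO")
```

The share rises as hardware prices fall or electricity prices climb, which is why the same cluster can have very different economics in different regions.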

6.3 Future Cost Curve: "Moore's Law" vs. "Huang's Law"

The future cost curve will be shaped by the tug-of-war between Moore's Law (slowing) and Huang's Law (AI compute doubles every 2 years via system innovation).

- Short-term (1-2 years): High absolute prices due to supply chain bottlenecks, but effective cost per task may slowly decline via algorithmic advances and domestic competition. A "price up, efficiency up" paradox.

- Mid-term (3-5 years): Energy cost becomes the dominant variable, exceeding 50% of TCO. Location of compute facilities will be dictated by access to cheap, green, stable power (nuclear, hydro). This is the context for Jensen Huang's "AI needs nuclear" statement.

- Long-term: Software and algorithms will define the final cost frontier as hardware hits physical and economic limits (electricity bills).
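The doubling assumption attributed to Huang's Law above compounds quickly. A minimal sketch, taking the two-year doubling period from this section as given rather than as a measured constant:

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Compute multiple after `years` if capability doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


for horizon in (2, 5, 10):
    print(f"after {horizon} years: {growth_factor(horizon):.1f}x compute")
```

If price per system stayed flat, the cost per unit of compute would fall by the same factor; the short-term "price up, efficiency up" paradox arises because absolute prices are rising at the same time.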

6.4 Business Model Innovations: Financialization of Compute

| Business Model | Core Logic | 2025 Examples | Value & Risk |
|---|---|---|---|
| Compute Leasing | Separate use from ownership | Cloud on-demand/reserved instances; Hardware subscription (e.g., DGX Pod monthly fee) | Value: Lowers barrier, provides elasticity. Risk: Long-term cost may exceed purchase; vendor lock-in. |
| Compute Bank | "Deposit" and "Lend" idle compute | Explored by some national compute scheduling platforms in China | Value: Activates idle compute. Risk: Hard to standardize heterogeneous compute quality; complex cross-entity settlement. |
| Compute Futures & Derivatives | Hedge future price risk | Forward contracts with cloud providers; CME exploring futures contracts | Value: Locks in cost/income. Risk: Compute is non-standardized; immature market; regulatory blank. |
| DePIN + Compute Mining | Token-incentivized decentralized market | Render Network, Akash Network | Value: Theoretically aggregates globally fragmented compute. Risk: Unstable quality; token volatility; not suited for enterprise-grade needs. |

Core Challenge for Financialization: Standardization of a "compute unit" that accounts for chip type, memory, interconnect, power, etc. This remains a major obstacle.

Chapter 7: Energy Constraints: AI's "Power Wall" and Sustainable Development

In 2025, the "power wall" has evolved from a technical challenge to a core issue of industry survival and sustainable development.

7.1 The Alarm: AI Data Center Energy Consumption & Grid Pressure

According to the International Energy Agency (IEA), global data center electricity demand could double in three years, primarily driven by AI. A single H200 chip consumes over 700W; a fully loaded rack can reach 40-100kW. In the U.S., planned data center demand accounts for ~20% of the nation's expected additional power generation over the next five years. Countries like Ireland and Singapore have paused new data center approvals due to grid capacity.
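The rack figures follow from simple multiplication. The sketch below uses the 700 W per accelerator cited above; the accelerator counts and the 30% allowance for CPUs, memory, networking, and fans are assumptions for illustration.

```python
def rack_power_kw(accelerators: int, watts_each: float = 700.0,
                  overhead_fraction: float = 0.3) -> float:
    """Accelerator power plus an assumed overhead for the rest of the rack."""
    return accelerators * watts_each * (1 + overhead_fraction) / 1000


for count in (32, 72, 96):
    print(f"{count} accelerators: about {rack_power_kw(count):.0f} kW per rack")
```

Dense racks land within the 40-100 kW range quoted above, an order of magnitude beyond what conventional air-cooled racks were designed for.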

7.2 Hardware-Level Response: The Race for Performance-per-Watt

The focus has shifted from peak TFLOPS to TFLOPS/Watt.

- Architecture Innovation: Specialized units like NVIDIA's Transformer Engine and Google/Amazon's matrix units sacrifice generality for extreme efficiency.

- Advanced Process & Packaging: 3nm/2nm and Chiplet with advanced packaging (CoWoS) reduce power, especially from data movement (30%+ of chip power).

- Software-Defined Power Management: Dynamic Voltage and Frequency Scaling (DVFS) and low-power states are standard in cloud AI instances.
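Performance-per-watt is a simple ratio, shown here with the H200 figures used elsewhere in this report (about 1979 TFLOPS peak, roughly 700 W). The second chip is a made-up comparison point, not a real product.

```python
def tflops_per_watt(peak_tflops: float, power_watts: float) -> float:
    """Peak throughput delivered per watt of chip power."""
    return peak_tflops / power_watts


h200 = tflops_per_watt(1979, 700)          # figures from this report
hypothetical = tflops_per_watt(1200, 350)  # invented chip: lower peak, much lower power

print(f"H200: {h200:.2f} TFLOPS/W")
print(f"hypothetical chip: {hypothetical:.2f} TFLOPS/W")
```

The invented chip has a lower peak yet wins on efficiency, which is the trade-off the metric is meant to expose. Because the report describes the H200 as drawing over 700 W, its ratio here is best read as an upper bound.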

7.3 Facility-Level Revolution: Liquid Cooling Goes from Optional to Mandatory

With rack power densities surpassing 40kW, air cooling is obsolete. Liquid cooling (cold plate or immersion) is now a mandatory standard for hyperscale AI data centers.

- Cold Plate: Current mainstream. Liquid flows through plates attached to chips.

- Immersion: Entire server submerged in dielectric fluid. Highest efficiency (PUE ~1.02), higher upfront cost and complexity, moving towards mainstream.

European companies (Aspera, Viessmann) lead, but Chinese vendors (Huawei, Sugon, Lenovo) are catching up fast.

7.4 Strategic-Level Layout: Tech Giants Enter the Energy Arena

Tech giants realize future AI's bottleneck is energy, not algorithms.

7.4.1 The Background of Jensen Huang's "AI Needs Nuclear" Thesis

NVIDIA CEO Jensen Huang's advocacy for nuclear (especially fusion) stems from the exponential relationship between compute growth and energy demand. Intermittent renewables cannot meet 24/7 high-load needs, and grid expansion is slow. New-generation small modular reactors (SMRs) and future fusion offer dense, stable, zero-carbon power well suited to AI data centers.

7.4.2 Direct Investments by Microsoft, Google, etc.

- Microsoft: Most aggressive. Signed long-term Power Purchase Agreements (PPAs) with U.S. nuclear plants worth over $2B. Major investor in fusion startups like Helion Energy.

- Google: Largest corporate buyer of renewables. Exploring "24/7 carbon-free energy" solutions, including geothermal and next-gen storage, and evaluating nuclear.

- Amazon: Building solar/wind farms near data centers and investing in green hydrogen for long-duration storage.

They are transitioning from energy consumers to energy strategic investors and shapers of new power systems.

7.5 Green AI: Policy, Standards, and the Blueprint for Sustainable Compute

Systematic solutions are needed beyond individual company actions.

- Policy Drive: EU's AI Act and Corporate Sustainability Reporting Directive (CSRD) require disclosure of model energy consumption and carbon footprint. China's "East Data West Computing" project channels compute to the renewable-rich west. More regions will tie compute approvals to strict PUE and carbon intensity (CUE) targets.

- Standard Setting: Industry is pushing "Green Compute" standards: "Carbon efficiency per effective computing power output," full lifecycle assessment, and green procurement criteria.

- Future Blueprint - Carbon-Negative Compute Infrastructure: An integrated "Energy-Compute-Carbon Removal" complex built next to a nuclear power plant or large geothermal/hydro plants, using waste heat for district heating or carbon capture, achieving net-negative carbon output for compute.

Chapter 8: Frontier Technology Trends and Future Outlook

8.1 Chip Architecture Revolution

| Technology | Core Promise | 2025 Progress Stage | Key Challenges |
|---|---|---|---|
| Compute-in-Memory (CiM) | Eliminate "memory wall," 10-100x energy efficiency gain | Research/early prototyping (based on RRAM/MRAM). "Near-memory" (HBM stacking) is mainstream phase 1. | Precision, reliability, mass-manufacturing consistency for analog CiM. |
| Photonics Computing | Ultra-high speed (THz), ultra-low latency & power for linear algebra | Early commercialization (e.g., Lightmatter Envise/Passage chips). Used as co-processors. | Cost of large-scale photonic integration, process maturity, compatibility with electronic ecosystem. |
| Quantum-Assisted AI | Speed up specific ML subproblems (optimization, sampling) | Very early R&D. Quantum cloud services offer ML toolkits. Show promise in materials simulation, small-scale optimization. | Decades from practical large-scale AI application. Value is in early hardware/algorithm reserve. |

8.2 Advanced Interconnect Technologies

| Technology | Core Goal | 2025 Status & Impact |
|---|---|---|
| NVIDIA NVLink (Proprietary) | Ultra-fast, low-latency GPU-to-GPU interconnect | Gen 5 enables rack-scale unified memory (e.g., in GB200 NVL72), crucial for large-scale training. |
| CXL (Compute Express Link) (Open) | Efficient CPU-accelerator & memory pooling interconnect | CXL 3.1 is mainstream. Enables memory pooling and efficient access to heterogeneous accelerators. |
| UCIe (Universal Chiplet Interconnect) (Open) | Standardize die-to-die interconnect for Chiplets | Rapid adoption in 2025. Foundation for a vibrant Chiplet ecosystem, breaking cost/yield limits of monolithic design. |

Combined Impact: These technologies shift system architecture from "CPU-centric satellite" to "memory/interconnect-centric peer-to-peer fabric".
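The claim in the UCIe row that Chiplets break the cost/yield limits of monolithic design can be illustrated with a simple yield calculation. The sketch below assumes the textbook Poisson yield model (yield = exp(-area × defect density)) and known-good-die testing before assembly; it deliberately ignores packaging cost, assembly yield, and die-to-die interface area. The die sizes and defect density are hypothetical, not vendor data.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    # Fraction of dies with zero killer defects under the Poisson model.
    return math.exp(-(area_mm2 / 100.0) * defects_per_cm2)


def silicon_per_good_system(total_area_mm2: float, n_dies: int,
                            defects_per_cm2: float) -> float:
    # Wafer area (mm^2) consumed, on average, per working system when the
    # logic is split into n equal dies that are tested before assembly.
    die_area = total_area_mm2 / n_dies
    return n_dies * die_area / poisson_yield(die_area, defects_per_cm2)


# Hypothetical comparison: one 800 mm^2 die vs. four 200 mm^2 chiplets.
D0 = 0.1  # defects per cm^2 (illustrative)
mono = silicon_per_good_system(800.0, 1, D0)
split = silicon_per_good_system(800.0, 4, D0)
print(f"monolithic: {mono:.0f} mm^2 of silicon per good system")
print(f"4 chiplets: {split:.0f} mm^2 of silicon per good system")
print(f"silicon saved: {1 - split / mono:.0%}")
```

Because yield falls exponentially with die area, splitting a large die into smaller ones wastes less silicon per working system; a real comparison would have to weigh that saving against the added cost of advanced packaging.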

8.3 Materials & Device Innovation

- CFET (Complementary FET): Key to scaling beyond 1nm. Stacks N and P transistors vertically. Intel, TSMC, Samsung showed progress in 2025; early mass production expected 2028-2030.

- 2D Materials (e.g., MoS₂): Potential ultra-thin channel material when silicon scales to atomic limits. Research focus in 2025 is on integration with silicon processes and contact resistance. IMEC exploring 2D-material-based CFET.

- New Memory: RRAM/MRAM for CiM; FeRAM (fast, low-power, high endurance) for cache/embedded memory.

8.4 System-Level Co-Design

The frontier of hardware innovation has risen to the vertical integration of algorithm, compiler, system architecture, and chip design.

- Paradigm Shift: From "design hardware first, then write algorithms" to "co-design hardware and software around target workloads/algorithms." Examples: NVIDIA Transformer Engine, Google TPU-TensorFlow optimization.

- Key Enablers: High-Level Synthesis for agile chip design; using ML to design chips (as Google did for TPU); reconfigurable architectures (FPGA, CGRA).

- Ultimate Goal: Domain-Specific Architecture (DSA) – designing optimal software-hardware stacks from scratch for domains like autonomous driving, scientific computing, achieving orders-of-magnitude efficiency gains. This is the deep logic behind tech giants' in-house chips.

8.5 2026-2030 Outlook: Five Certain Trends & Three Potential Black Swans

Five Certain Trends:

- Hybrid Heterogeneous Compute as Absolute Mainstream: CPU+GPU+NPU+DPU+FPGA interconnected via CXL/UCIe, smartly scheduled by software.

- From "Compute Center" to "Compute-Energy Complex": Large AI data centers deeply integrate nuclear/fusion, energy storage, waste heat utilization.

- Deepening of Geopolitical Tech Spheres: Parallel competing "tech hemispheres" based on U.S. CUDA, China's Ascend, and Europe's RISC-V/open-source ecosystems.

- Democratization & Automation of AI Hardware Design: Cloud-based chip design tools and the Chiplet ecosystem enable more firms to build customized, cost-effective AI accelerators.

- Sustainability as a Hard KPI: Carbon footprint becomes a core procurement/evaluation metric alongside performance and price, driving green innovation across the chain.

Three Potential Black Swan Events:

- Unexpected Breakthrough in Disruptive Compute Principle: If Compute-in-Memory or Photonics achieves rapid, unexpected progress in accuracy, cost, and manufacturing, it could "disrupt" existing silicon-logic giants.

- Severe Disruption of Critical Mineral Supply Chains: Supply chains for rare earth, gallium, germanium used in chips, motors, batteries are highly concentrated. Geopolitical conflict or embargo could halt global AI hardware production.

- Radical Implementation of Global AI Energy Efficiency Regulation: If the UN or major economies impose extreme, retroactive energy consumption and carbon emission rules for AI compute, operating costs could rise immediately, forcing major changes in technology roadmaps and the retirement of energy-intensive infrastructure.

Appendix

Appendix 1: Global Major AI Chip Vendors & Product Lines (2025 Update)

| Category | Vendor | Key AI Product Lines (2025) | Key Architecture/Ecosystem |
|---|---|---|---|
| International Cloud Training | NVIDIA | H200, H20 (China), B200/GB200 (Blackwell) | Hopper, Blackwell, CUDA |
| | AMD | MI325X, MI350X (Instinct) | CDNA 3/3+, ROCm |
| | Intel | Gaudi 3, Falcon Shores (Future) | Gaudi, OpenVINO |
| International Cloud/Edge Inference | NVIDIA | L40S, L20 (China), Orin (Edge) | Ada Lovelace, CUDA |
| | Amazon AWS | Trainium3, Inferentia2 | In-house, Neuron SDK |
| | Google Cloud | TPU v5e/v5p, TPU v6 (preview) | In-house, TensorFlow/JAX |
| Domestic Cloud (China) | Huawei | Ascend 910B, Ascend 310P | Da Vinci, MindSpore |
| | Hygon | Deep Compute Series DCU | CDNA-like, ROCm compatible |
| | Cambricon | MLU590 | MLUarch, Cambricon software platform |
| | Biren Technology | BR100, BR104 | In-house Biren architecture |
| Terminal & Edge | Qualcomm | Snapdragon 8 Gen 4 (Phone), Snapdragon X Elite (PC) | Hexagon NPU, AI Stack |
| | MediaTek | Dimensity 9400 | APU, NeuroPilot |
| | Apple | A18 Pro, M4 | Neural Engine, Core ML |
| | Huawei HiSilicon | Kirin 9010 | Da Vinci NPU, HarmonyOS |

Appendix 2: Key Terminology Glossary

- TFLOPS/TOPS: Tera Floating-Point Operations Per Second / Tera Operations Per Second. TFLOPS are commonly used for training; TOPS are commonly used for inference.

- HBM (High-Bandwidth Memory): Memory stacked using 3D and Through-Silicon Via (TSV) tech, offering far greater bandwidth than GDDR. Critical for AI chips.

- CoWoS (Chip-on-Wafer-on-Substrate): TSMC's 2.5D advanced packaging tech to integrate logic die and HBM. A key bottleneck for high-end AI chip manufacturing.

- TCO (Total Cost of Ownership): Full lifecycle cost including hardware, power, cooling, ops, space, labor.

- PUE (Power Usage Effectiveness): Total data center energy / IT equipment energy. Closer to 1 means higher efficiency.

- Chiplet: Small functional chips that are integrated via advanced packaging to form a larger system. Improves yield, reduces costs, and enables heterogeneous integration.

- NPU (Neural Processing Unit): Processor core specialized for AI workloads, offering high energy efficiency.

- DePIN (Decentralized Physical Infrastructure Networks): Network model using token incentives to build/maintain distributed physical infrastructure (e.g., compute, storage).
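As a worked illustration of how the TCO and PUE entries above interact, the following sketch adds up hardware, energy, and operations cost over a deployment period. It is a simplified model under stated assumptions: the function names, the cost breakdown (cooling is folded into PUE; space and labor are folded into one annual operations figure), and all numbers are hypothetical.

```python
HOURS_PER_YEAR = 365 * 24


def energy_cost_usd(it_power_kw: float, pue: float,
                    usd_per_kwh: float, years: float) -> float:
    # Facility energy = IT energy x PUE, so cooling and power-delivery
    # overhead are already included in this term.
    return it_power_kw * pue * HOURS_PER_YEAR * years * usd_per_kwh


def tco_usd(hardware_usd: float, it_power_kw: float, pue: float,
            usd_per_kwh: float, annual_ops_usd: float, years: float) -> float:
    # Simplified TCO: purchase price + energy + operations (space, labor).
    return (hardware_usd
            + energy_cost_usd(it_power_kw, pue, usd_per_kwh, years)
            + annual_ops_usd * years)


# Hypothetical cluster: 100 kW of IT load, 4-year life, $0.10/kWh.
for site_pue in (1.5, 1.2):
    total = tco_usd(hardware_usd=3_000_000, it_power_kw=100.0, pue=site_pue,
                    usd_per_kwh=0.10, annual_ops_usd=150_000, years=4)
    print(f"PUE {site_pue}: 4-year TCO = ${total:,.0f}")
```

In this simplified model the energy term scales linearly with PUE, so the same hardware costs more to own in a less efficient facility.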

Appendix 3: References & Data Sources

This report synthesizes public information as of December 2025. Major sources include:

- Official press releases, technical white papers, and earnings call transcripts from NVIDIA, AMD, Intel, Huawei, Google, Microsoft, Amazon, etc.

- Industry analyst reports: Gartner, IDC, TrendForce, Counterpoint.

- Professional tech media & analysis: The Information, SemiAnalysis, AnandTech, Tom's Hardware, and major Chinese tech media.

- Academic conference and industry summit presentations: NVIDIA GTC, Huawei Connect, Google I/O, Microsoft Build, and cutting-edge research disclosed at top semiconductor conferences (ISSCC, VLSI, Hot Chips).

- Government & international organization reports: U.S. Department of Commerce BIS announcements, International Energy Agency (IEA) reports, EU policy documents.

Report compiled and analyzed by AISOTA.com. All monetary figures are in USD unless otherwise specified in original context. This document is for informational purposes only.

This article was written by the author with the assistance of artificial intelligence (such as outlining, draft generation, and improving readability), and the final content was fully fact-checked and reviewed by the author.